MCP servers are currently the new hipster thing in the LLM landscape, and for good reason. Your local LLM no longer just generates responses: you can feed it real data from your documentation, databases, wikis and Jira environments, and it can even run commands directly on your systems. What could possibly go wrong?

Guide

This is a step-by-step guide to getting started with MCP/OpenAPI servers together with Open-WebUI and ollama. While following the MCP and Open-WebUI documentation, I ran into some issues that I will try to address here. The goal is to get you running a local LLM with MCP servers and Open-WebUI. It is not meant to be a full tutorial on MCP servers or Open-WebUI; if you want to know more about Open-WebUI, ollama or MCP servers, there are much better guides out there.

Prerequisites

- ollama and open-webui installed and running

- uv installed

- models downloaded in ollama

- Node.js installed (the memory server below is started with npx)

Setup MCP servers

First we need to set up the MCP servers. Open-WebUI does not use MCP directly; it uses an OpenAPI proxy (mcpo) to communicate with the MCP servers. We will run this proxy with uv. Before we download and run it, we create a configuration file that sets the name, command and arguments of each server we want to run.

First set up a venv with uv:

uv venv

source .venv/bin/activate

uv init

Create a file called config.json:

{
  "mcpServers": {
    "memory": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-memory"]
    },
    "time": {
      "command": "uvx",
      "args": ["mcp-server-time", "--local-timezone=Europe/Amsterdam"]
    }
  }
}
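A small mistake in config.json, such as a stray trailing comma, is easy to miss, so it can help to check the file before starting the proxy. The sketch below is only a sanity check written for this guide, not the validation the proxy itself performs: the function name `load_mcp_config` is hypothetical, and it only understands the command-based entries used here.

```python
import json


def load_mcp_config(text: str) -> dict:
    """Parse the config text and return the "mcpServers" mapping.

    Hypothetical helper for this guide: it only checks the command-based
    entries shown above, not every layout the proxy may accept.
    """
    try:
        config = json.loads(text)
    except json.JSONDecodeError as err:
        # Strict JSON rejects trailing commas and comments.
        raise ValueError(
            f"config is not valid JSON (line {err.lineno}, column {err.colno}): {err.msg}"
        ) from err

    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict) or not servers:
        raise ValueError('config needs a non-empty "mcpServers" object')

    for name, entry in servers.items():
        if not isinstance(entry, dict) or not isinstance(entry.get("command"), str):
            raise ValueError(f'server "{name}" needs a "command" string')
        if not isinstance(entry.get("args", []), list):
            raise ValueError(f'server "{name}": "args" must be a list')
    return servers


# Usage (reads the file created above):
# with open("config.json", encoding="utf-8") as handle:
#     print(sorted(load_mcp_config(handle.read())))
```

If the file parses, the function returns the configured servers by name; otherwise the error message points at the line and column where parsing stopped.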

With the configuration file in place, we can start the proxy with this command:

uvx mcpo --host 0.0.0.0 --port 8000 --config ./config.json

This starts the proxy. You can check that it is running by going to http://localhost:8000/docs, which should serve the OpenAPI proxy documentation and list the available tools. Both memory and time should be listed.
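The same check can be scripted instead of done in the browser. This is a minimal sketch, assuming the proxy serves a separate OpenAPI schema for every configured server at `/<name>/openapi.json` (that path is an assumption based on how the proxy mounts each server under its own route; adjust it if your version differs). The helper names are hypothetical.

```python
import json
from urllib.request import urlopen

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def fetch_openapi(base_url: str, server: str, timeout: float = 5.0) -> dict:
    """Download the OpenAPI schema of one server behind the proxy.

    Assumes the schema lives at <base_url>/<server>/openapi.json.
    """
    url = f"{base_url.rstrip('/')}/{server}/openapi.json"
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


def list_operations(spec: dict) -> list:
    """Return "METHOD /path" strings for every operation in a schema."""
    operations = []
    for path, methods in sorted(spec.get("paths", {}).items()):
        for method in methods:
            # Skip non-operation keys such as "parameters" or "summary".
            if method.lower() in HTTP_METHODS:
                operations.append(f"{method.upper()} {path}")
    return operations


# Usage, with the proxy from this guide running:
# for server in ("memory", "time"):
#     print(server, list_operations(fetch_openapi("http://localhost:8000", server)))
```

An empty list or a connection error here means the proxy did not start that server, which is worth fixing before moving on to Open-WebUI.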

Setup Open-WebUI

Next we will configure these tools in the Open-WebUI interface. There are two places where you can do this.

Admin interface

The Admin interface lets you set up these tools for every user in your Open-WebUI environment. If you have a multitenant environment, this is a great way to expose them. Unfortunately, in the latest version these tools are not enabled by default; you need to enable them per model.

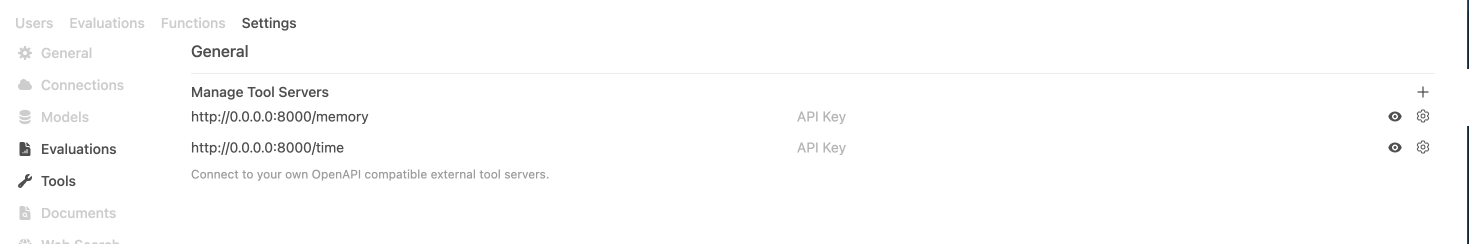

In the interface, click your name and select Admin Panel. Go to Settings and select Tools. Instead of adding a single endpoint for all tools, add each tool individually. If you add http://0.0.0.0:8000/ as the endpoint, the interface will behave as if it is working, but models will never start using it. Add each tool under its own route instead; with the configuration above that should be http://localhost:8000/memory and http://localhost:8000/time.

Don’t forget to save after adding each tool.
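If you run more than a couple of servers, the list of endpoints to add can be derived from config.json rather than typed by hand. A minimal sketch, assuming each server is reachable under a route that matches its name in the configuration; `tool_server_urls` is a hypothetical helper, and the base URL is the host and port used earlier in this guide.

```python
import json


def tool_server_urls(config: dict, base_url: str = "http://localhost:8000") -> dict:
    """Map each configured server name to the URL to register in Open-WebUI.

    Assumes the proxy exposes every server at <base_url>/<name>.
    """
    base = base_url.rstrip("/")
    return {name: f"{base}/{name}" for name in config.get("mcpServers", {})}


# Usage (reads the config.json from this guide):
# with open("config.json", encoding="utf-8") as handle:
#     for name, url in tool_server_urls(json.load(handle)).items():
#         print(f"{name}: {url}")
```

Each printed URL is one entry to add and save in the tools settings.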

Since these tools are not enabled by default, you have to enable them per model. You can find these settings under Settings -> Models -> Edit Model -> Tools. Here you can enable the tools you just added and set the default tool for this model. Be careful with models that do not support tools: I previously noticed that enabling tools on such a model results in an error, after which the model can no longer be used.
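To avoid enabling tools on a model that cannot use them, you can ask ollama about the model first. This is a rough sketch under several assumptions: that ollama listens on its default port 11434, that its `/api/show` endpoint accepts a JSON body with a `model` field, and that your ollama version reports a `capabilities` list in the response. Older versions may not include that list, in which case the helper returns `None` rather than guessing. The function names and the model name in the usage comment are placeholders.

```python
import json
from typing import Optional
from urllib.request import Request, urlopen


def show_model(model: str, ollama_url: str = "http://localhost:11434") -> dict:
    """Fetch model details from ollama (assumes the /api/show endpoint)."""
    payload = json.dumps({"model": model}).encode("utf-8")
    request = Request(
        f"{ollama_url.rstrip('/')}/api/show",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urlopen(request, timeout=10) as response:
        return json.load(response)


def supports_tools(show_response: dict) -> Optional[bool]:
    """Return True/False if capabilities are reported, None if unknown."""
    capabilities = show_response.get("capabilities")
    if not isinstance(capabilities, list):
        return None  # this ollama version does not report capabilities
    return "tools" in capabilities


# Usage ("llama3.1" is just a placeholder model name):
# print(supports_tools(show_model("llama3.1")))
```

A result of `False` suggests leaving the tools disabled for that model in the settings above.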

Personal Settings

Alternatively, you can set the tools directly at your user level.